Teams

Epidemiology of Ideas

Where do ideas come from? Where do they go? And how do we work with them using a computer?

One stage in any sort of scholarly research is coming up with an idea -- a hypothesis -- about what might be going on in a set of raw data. A second stage is looking closely at the data to see if the idea is true. But the most important stage is looking at the data again, in light of existing scholarship, to understand why it's true and how it relates to the rest of reality.

Juola and Bernola (Proc. DH 2009) have proposed a radically new method for creating and testing ideas by computer (see http://www.twitter.com/conjecturator). Their program, the Conjecturator, handles the first stage-and-a-half described above: it creates random conjectures about patterns that may or may not appear in text data, then tests them against a database of texts.

For example, it is well known that men's and women's speech differ in interesting (presumably culturally derived) ways, as can be seen in frequency differences in certain word categories. Linguists have listed many such differences (e.g., women use more intensifiers; men use fewer tag questions), and there is something of a cottage industry in finding and explaining them. But the scope for potential research is huge: Roget's thesaurus lists more than 1000 different semantic "categories", most of which have never been studied in the context of gender and language. [For example, we are aware of no study of the use of animal terms (Roget category III.iii.1.2/366).] Does men's speech differ from women's in this regard? At this writing, we do not know. If it does, however, that finding would raise a new and interesting question to explore. By using a computer, we can tentatively answer this and tens of thousands of related questions quickly, generating thousands of possibilities for further research.
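The generate-and-test idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the Conjecturator's actual code: the two-entry `CATEGORIES` lexicon is a hypothetical stand-in for Roget's categories, the tokenizer is deliberately crude, and the two-proportion z-test is our own choice for comparing how often a category's words occur per token in two groups of texts.

```python
import math
import random
import re

# Hypothetical category lexicon: a tiny stand-in for Roget-style categories.
# A real system would load the full thesaurus (1000+ categories).
CATEGORIES = {
    "animals": {"dog", "cat", "horse", "bird", "cow"},
    "intensifiers": {"very", "so", "really", "extremely"},
}

def tokenize(text):
    """Lowercase and extract runs of letters/apostrophes (a crude tokenizer)."""
    return re.findall(r"[a-z']+", text.lower())

def category_counts(texts, words):
    """Return (hits, total_tokens) for one category across a list of texts."""
    hits = total = 0
    for text in texts:
        tokens = tokenize(text)
        total += len(tokens)
        hits += sum(1 for t in tokens if t in words)
    return hits, total

def two_proportion_z_test(h1, n1, h2, n2):
    """Two-sided two-proportion z-test on hit rates h1/n1 vs h2/n2; returns (z, p)."""
    if n1 == 0 or n2 == 0:
        return 0.0, 1.0  # no data in one group: nothing to test
    p_pool = (h1 + h2) / (n1 + n2)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n1 + 1 / n2))
    if se == 0:
        return 0.0, 1.0
    z = (h1 / n1 - h2 / n2) / se
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
    return z, p

def conjecture_and_test(group_a, group_b, rng=random):
    """Conjecture: a randomly chosen category's rate differs between groups. Test it."""
    name = rng.choice(sorted(CATEGORIES))
    h1, n1 = category_counts(group_a, CATEGORIES[name])
    h2, n2 = category_counts(group_b, CATEGORIES[name])
    z, p = two_proportion_z_test(h1, n1, h2, n2)
    return name, z, p
```

For example, `conjecture_and_test(texts_by_men, texts_by_women)` (both hypothetical lists of strings) picks one category at random and returns its name, the z statistic, and a two-sided p-value; looping over every category instead yields the large batch of candidate findings described above.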

This approach raises a number of critical philosophical and technical issues:

- What kind of "patterns" are interesting to study?

- How can these patterns be identified by computer?

- What kind of testing is appropriate to figure out if the conjectures hold?

- As the size of the collection increases, how can the program scale?

- What kind of technology is appropriate for this?

- How can results from this sort of analysis be made useful?
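One testing concern follows directly from running thousands of conjectures: at a 0.05 threshold, roughly 5% of conjectures with no real effect behind them will still look "significant" by chance. One standard remedy, which we offer as an illustration rather than anything the prototype is known to use, is the Benjamini-Hochberg procedure, which controls the expected fraction of false discoveries among the accepted conjectures. A minimal sketch:

```python
def benjamini_hochberg(p_values, alpha=0.05):
    """Return sorted indices of hypotheses rejected under Benjamini-Hochberg
    false discovery rate control at level alpha."""
    m = len(p_values)
    # Rank hypotheses from smallest to largest p-value.
    order = sorted(range(m), key=lambda i: p_values[i])
    cutoff = 0
    # Find the largest rank k with p_(k) <= k * alpha / m.
    for rank, i in enumerate(order, start=1):
        if p_values[i] <= rank * alpha / m:
            cutoff = rank
    # Reject (accept as findings) the k smallest p-values.
    return sorted(order[:cutoff])
```

Fed the p-values from a batch of tested conjectures, it returns the indices of those worth passing on to a human reader; it is less conservative than a Bonferroni correction, which matters when the goal is to surface leads for further research.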

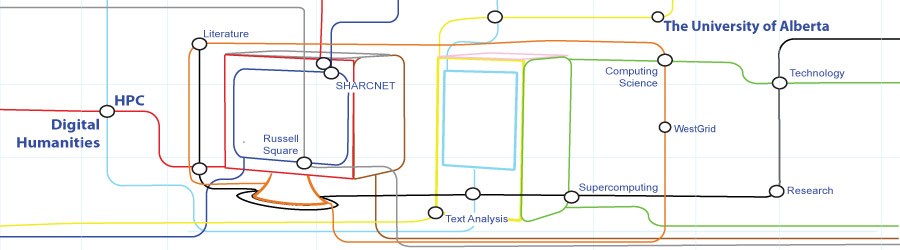

This creates a key opportunity for high-performance computing (HPC). A collection the size of JSTOR contains more than five million articles, and any serious attempt to examine it will require supercomputing-scale resources and programs.
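Much of the counting behind such tests is embarrassingly parallel, which is what makes HPC a natural fit. The sketch below, which is our own illustration and not the prototype's design, splits category counting into a map step that tallies each document independently and a reduce step that merges partial tallies. `WORD_TO_CATEGORY` is a hypothetical toy lexicon, and the map calls run serially here; on a cluster they could be distributed across workers, since no document depends on any other.

```python
import re
from collections import Counter
from functools import reduce

# Hypothetical lexicon mapping words to category names (a stand-in for Roget's).
WORD_TO_CATEGORY = {"dog": "animals", "cat": "animals", "very": "intensifiers"}

def map_document(text):
    """Map step: tally category hits and total tokens for a single document."""
    tokens = re.findall(r"[a-z']+", text.lower())
    counts = Counter(WORD_TO_CATEGORY[t] for t in tokens if t in WORD_TO_CATEGORY)
    counts["__tokens__"] = len(tokens)
    return counts

def reduce_counts(a, b):
    """Reduce step: merge two partial tallies (Counter addition is associative)."""
    return a + b

def corpus_counts(documents):
    """Combine per-document tallies into corpus-wide totals."""
    return reduce(reduce_counts, map(map_document, documents), Counter())
```

Because the merge is associative, partial tallies can be combined in any grouping, so the same two functions work whether the corpus has ten documents or five million.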

We see three major research challenges to be addressed at the workshop:

- Re-developing a version of the current Conjecturator prototype to handle HPC-scale data and queries

- Developing a suitable front end to the set of confirmed conjectures to let interested researchers sort through thousands or hundreds of thousands of findings

- Identifying criteria for "good" conjectures -- conjectures that are interesting, non-trivial, and likely to spawn further research.

Patrick Juola teaches Computer Science at Duquesne University (Pittsburgh, PA, USA). A former software engineer for AT&T and postdoc in the psychology department at Oxford University, he is noted for his interdisciplinary approach to scholarship. He is an active researcher in text analysis, including authorship attribution and profiling. He has two books to his credit, one on computer architecture and another on authorship, and is a frequent speaker on various aspects of digital humanities. He is also the original designer and a co-author of the Conjecturator prototype.